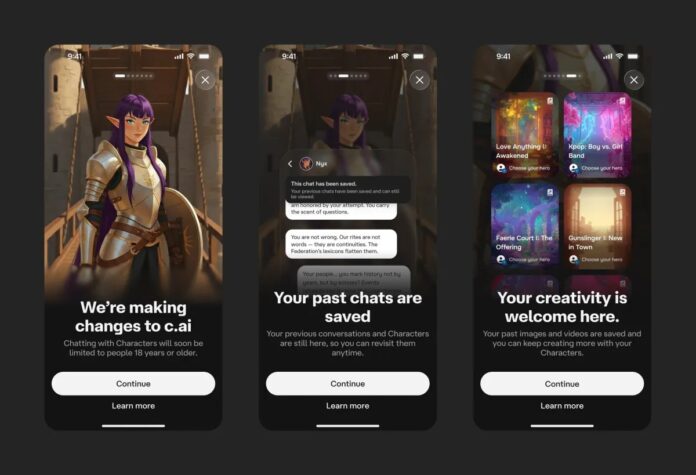

Character AI is rolling out “Stories,” an interactive fiction format, in response to growing mental health risks linked to its open-ended chatbot service. The company has ended open-ended chatbot access for users under 18, a change that follows lawsuits alleging a connection between AI interactions and suicides.

The Rise of AI Chatbots and the Backlash

For the past month, Character AI has gradually restricted access for minors. By Tuesday, underage users could no longer engage in free-form chats with AI characters. The move comes amid increasing scrutiny of always-available AI companions that can start conversations unprompted, which critics say can lead to dependency and psychological harm.

Several lawsuits have been filed against companies such as OpenAI and Character AI, alleging harm from unrestricted access to AI chatbots. That legal pressure, combined with public concern, pushed Character AI toward a more structured format.

What Are “Stories” and Why Now?

“Stories” offer a guided narrative experience, allowing users to interact with characters within predefined scenarios. This differs sharply from the chatbots, which allow open-ended conversations and can message users unprompted.

Character AI framed this as a safety-first approach: “Stories offer a guided way to create and explore fiction, in lieu of open-ended chat.” The timing also aligns with the rising popularity of interactive fiction, making it a viable alternative.

User Reactions and Regulatory Pressure

The response from teens is mixed. Some acknowledge the ban as a necessary step to curb addiction. One user on the Character AI subreddit wrote, “I’m so mad about the ban but also so happy because now I can do other things and my addiction might be over finally.” Others expressed disappointment while still acknowledging that the decision was justified.

The change comes as lawmakers move to regulate AI companions. California recently became the first state to regulate these tools, and a federal bill under consideration would ban AI companions for minors altogether.

A Necessary Pivot?

Character AI’s pivot to “Stories” is a response to tangible harm and growing regulation. While the new format may not fully satisfy users accustomed to unrestricted chatbot access, it represents a more controlled and less psychologically risky environment. The decision underscores the need for greater oversight in the rapidly evolving field of AI interactions.